An imaginary number is the square root of a negative number, which of course doesn't make sense; any real number multiplied by itself comes out positive (or zero). But mathematics is all about laying down axioms and seeing what logically follows. We can simply declare that √-1 = i instead of treating it as a calculator error.

Alright, you think, but we can't just declare things into existence. What does an imaginary number even mean in the real world? You can have 3 apples, or maybe even -3 apples if you owe someone, but 3i apples has no concrete, physical interpretation, right?

Well, it turns out that by allowing complex numbers—a set that includes both real and imaginary numbers—we open up a new space for doing mathematics and physics. In fact, if we want to explain the bewildering diversity of chemical elements or the solidity of matter, we have to explore this imaginary space. Could anything be more concrete?

Take a look and you'll see...

Before we delve into the physics, let's build a little intuition about complex numbers. The imaginary unit, i, is the square root of -1. From that alone, we can see that powers of i cycle through four values:

i*i = -1, because that's our definition

(i*i)*i = -1*i = -i

(i*i)*(i*i) = -1*-1 = 1

And (i*i*i*i)*i = 1*i = i again
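The cycle is easy to check by machine. Here is a minimal Python sketch (Python writes i as `1j`); the helper name `powers_of_i` is just an illustrative choice.

```python
# Python writes the imaginary unit as 1j.
i = 1j

def powers_of_i(count):
    """Return [i**0, i**1, ..., i**(count-1)] by repeated multiplication."""
    result = []
    z = 1 + 0j  # i**0
    for _ in range(count):
        result.append(z)
        z *= i  # each multiplication by i is one step around the cycle
    return result

cycle = [1, i, -1, -i]
for n, z in enumerate(powers_of_i(9)):
    # i**n depends only on n mod 4
    assert z == cycle[n % 4]
    print(n, z)
```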

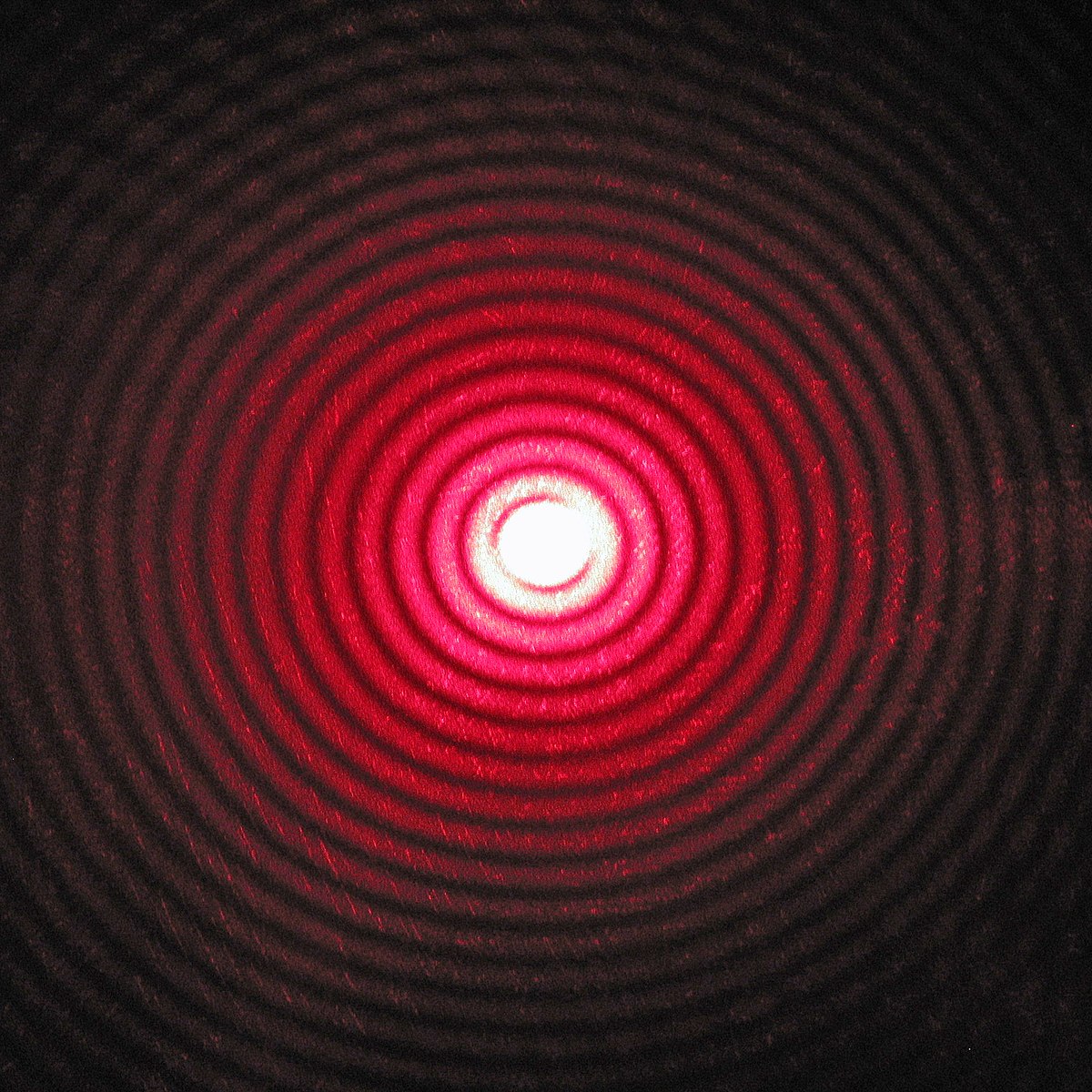

This cycle lends itself to a neat geometric interpretation. Instead of the humdrum xy-plane, we can imagine a complex plane like this:

(Image: the complex plane. By Svjo [CC BY-SA 4.0], from Wikimedia Commons)

It might look like we've only renamed a plain plane, but this space gives us flexibility the real numbers lack. Real numbers sometimes fall down on the job when you’re trying to solve polynomial equations: x² + 1 = 0, for instance, has no real solution. But once you declare i to be a square root of -1, every non-constant polynomial equation has a complex solution. Geometrically, multiplying by i rotates a point 90° counterclockwise around the origin, so we can move around the complex plane through simple multiplication, without more cumbersome machinery like rotation matrices.
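To make both claims concrete, here is a small Python sketch using the standard `cmath` module. The helper `quadratic_roots` is a hypothetical name; it simply applies the quadratic formula, which `cmath.sqrt` lets us use even when the number under the square root is negative.

```python
import cmath

def quadratic_roots(a, b, c):
    """Both roots of a*x**2 + b*x + c = 0, allowing complex results."""
    disc = cmath.sqrt(b * b - 4 * a * c)  # cmath.sqrt accepts negative input
    return ((-b + disc) / (2 * a), (-b - disc) / (2 * a))

# x**2 + 1 = 0 has no real solution, but it has two complex ones: i and -i.
r1, r2 = quadratic_roots(1, 0, 1)
print(r1, r2)

# Multiplying by i rotates a point 90 degrees counterclockwise about 0.
z = 3 + 1j
w = z * 1j
print(w)                                 # the point (3, 1) moves to (-1, 3)
print(abs(z), abs(w))                    # the distance from 0 is unchanged
print(cmath.phase(w) - cmath.phase(z))   # the angle grows by pi/2
```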

Okay, finding polynomial roots probably sounds pretty boring, so we're not going to dwell on that. We'll mostly think in terms of complex rotation and how it lets us peek into weird, non-Euclidean spaces where up and down no longer work the way they should. But know that in the background, these imaginary roots are letting us do a bunch of linear algebra by providing solutions to otherwise unsolvable equations.

We'll begin with a spin...

Let's turn back to physics. Explaining how the properties of chemical elements (the gregariousness of carbon, the aloofness of neon) arise from quantum mechanics goes like this: the protons and neutrons of an atom are squeezed into a tiny nucleus while the electrons whizz around it in concentric orbital shells. How “filled” the outermost shell is (mostly) determines an element's chemical properties. If all the electrons could crowd into the innermost shell, there would be no shell structure to speak of, so whatever keeps these negative nancies from piling in together is responsible for, well, basically all macroscopic structure.

The culprit is the Pauli exclusion principle, which says that no two identical particles with half-integer spin (such as electrons) can occupy the same quantum state. Spin is intrinsic angular momentum, measured in units of ħ. If you measure the spin of an electron along some axis, you get either +1/2 (referred to as spin up) or -1/2 (upside down, or spin down), with no other possible outcomes.
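In the standard textbook formalism, the possible results of a spin measurement are the eigenvalues of the spin operator for that axis, built from the Pauli matrices. This minimal Python sketch (helper names are illustrative, and the closed-form 2x2 eigenvalue formula is used to avoid any library dependency) checks that every axis gives exactly -1/2 and +1/2, in units of ħ.

```python
import math

def spin_operator(nx, ny, nz):
    """Spin along the unit vector (nx, ny, nz), in units of hbar.

    This is (nx*sigma_x + ny*sigma_y + nz*sigma_z) / 2 written out as a 2x2
    matrix, using the standard Pauli matrices.
    """
    return [[0.5 * nz, 0.5 * (nx - 1j * ny)],
            [0.5 * (nx + 1j * ny), -0.5 * nz]]

def eigenvalues_2x2_hermitian(m):
    """Eigenvalues (smallest first) of a 2x2 Hermitian matrix [[a, b], [conj(b), d]]."""
    a, b, d = m[0][0].real, m[0][1], m[1][1].real
    mean = (a + d) / 2
    radius = math.sqrt(((a - d) / 2) ** 2 + abs(b) ** 2)
    return (mean - radius, mean + radius)

s = 1 / math.sqrt(3)
for axis in [(0, 0, 1), (1, 0, 0), (0, 1, 0), (s, s, s)]:
    low, high = eigenvalues_2x2_hermitian(spin_operator(*axis))
    print(axis, round(low, 12), round(high, 12))  # -0.5 and 0.5 for every axis
```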

To keep track of the spin state of an electron, we can write a wave function that looks like this:

|↑⟩

Flip the electron upside down and the spin state is:

|↓⟩

Now keep rotating it the same way for another 180°, until it's right side up again, and you get:

-|↑⟩

Wait, what? We seem to have picked up a minus sign somehow. In fact, you have to rotate an electron a full 720° to cycle back to the state you started with. The minus sign doesn't show up directly in measurements, because anything we observe in quantum mechanics depends on the squared magnitude of the wave function, but its presence in the math is pivotal.
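The sign flip falls straight out of the standard spin-1/2 rotation matrix. This sketch assumes the "flip" is a rotation about the y-axis and writes each state as two components along |↑⟩ and |↓⟩; `rotate_about_y` and `rounded` are illustrative helpers, not library functions.

```python
import math

UP = (1.0, 0.0)  # |up>, as components along (|up>, |down>)

def rotate_about_y(state, degrees):
    """Apply the spin-1/2 rotation exp(-i * theta * S_y / hbar) to a two-component state.

    For spin 1/2 this is the real matrix
        [[cos(theta/2), -sin(theta/2)],
         [sin(theta/2),  cos(theta/2)]]
    The half angle is where the sign flip at 360 degrees comes from.
    """
    half = math.radians(degrees) / 2
    c, s = math.cos(half), math.sin(half)
    up, down = state
    return (c * up - s * down, s * up + c * down)

def rounded(state):
    # Round away floating-point noise; adding 0.0 turns -0.0 into 0.0.
    return tuple(round(x, 12) + 0.0 for x in state)

for deg in (0, 180, 360, 540, 720):
    state = rounded(rotate_about_y(UP, deg))
    probs = tuple(round(x * x, 12) for x in state)  # what a measurement can see
    print(f"{deg:3d} deg: state {state}, probabilities {probs}")
```

The 180° row is |↓⟩, the 360° row is -|↑⟩, and only the 720° row matches |↑⟩ exactly, while the squared components at 0° and 360° are identical.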

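Say a transporter accident duplicates Kirk and the two end up fighting.

(Image credit: Paramount Pictures and/or CBS Studios)

One Kirk wins (W) and the other loses (L). Listing the first Kirk's outcome first, the pair ends up either like this:

|W⟩|L⟩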

Or vice versa:

|L⟩|W⟩

Each one will scream, "Spock... it’s... me!" but there's no evil mustache to tell them apart. For identical quantum particles, swapping the two is mathematically equivalent to taking one particle and rotating it a full 360°; in both cases you end up with states that no observation can tell apart.

But there are still two outcomes. Whenever we're dealing with multiple possibilities in quantum mechanics, it's time for you-know-who and his poor cat. Just as the cat can be in a superposition of alive and dead, a Kirk particle can be in a superposition of winning and losing.

Nothing weird happens when you swap identical bosons (particles with integer spin, like photons). Their exchange is symmetric, so their superposition looks like this:

|W⟩|L⟩ + |L⟩|W⟩

But electrons (and other fermions, the particles with half-integer spin) are antisymmetric: swapping them, like a 360° rotation, gives us that minus sign. So their superposition is:

|W⟩|L⟩ - |L⟩|W⟩

If both particles are in the same state (the same fight), the two terms are identical, so subtracting one from the other gives 0. Anywhere the wave function is 0, we have a 0% chance of finding the particles in that configuration. So two electrons will never end up in the same fight in the first place. (Kirk, then, is clearly a boson.) Replace "fight" with "spin up state in an atom's 1s shell" and you've got the beginnings of chemistry and matter.
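Here is a toy Python version of that bookkeeping. It assumes W and L are two perfectly distinguishable single-particle states, represented as the basis vectors [1, 0] and [0, 1]; the helpers `kron` and `combine` are hypothetical names, and the results are left unnormalized.

```python
def kron(a, b):
    """Two-particle state |a>|b> (tensor product), as a flat list of amplitudes."""
    return [x * y for x in a for y in b]

def combine(a, b, sign):
    """|a>|b> + sign * |b>|a>: sign=+1 for bosons, sign=-1 for fermions."""
    return [p + sign * q for p, q in zip(kron(a, b), kron(b, a))]

W = [1.0, 0.0]  # hypothetical single-particle state "wins"
L = [0.0, 1.0]  # hypothetical single-particle state "loses"

print(combine(W, L, +1))  # [0.0, 1.0, 1.0, 0.0]   symmetric (bosons)
print(combine(W, L, -1))  # [0.0, 1.0, -1.0, 0.0]  antisymmetric (fermions)
print(combine(W, W, +1))  # [2.0, 0.0, 0.0, 0.0]   bosons can share a state
print(combine(W, W, -1))  # [0.0, 0.0, 0.0, 0.0]   two fermions in one state: nothing left

# Swapping the two particles flips the sign of the fermion state.
assert combine(L, W, -1) == [-x for x in combine(W, L, -1)]
```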

What we'll see will defy explanation...

Okay, so how do we make sense of the weird minus sign a rotated electron picks up? This perplexing behavior comes from the electron's spin of 1/2, which we can only understand if we venture back into the world of imaginary numbers, to a complex vector space called Hilbert space.

Evidence that electrons are spin-1/2 came from the Stern-Gerlach experiment, in which Stern and Gerlach sent silver atoms (each with a single unpaired outer electron) through a non-uniform magnetic field. Spin-up atoms were deflected one way, spin-down atoms the opposite way. That there were only two possible values along a given axis was weird enough, but follow-up experiments revealed even stranger behavior.

(Image: by Theresa Knott from en.wikipedia, own work, CC BY-SA 3.0)

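Now take only the spin-up atoms and send them through a second apparatus aligned the same way. Do any of them come out spin down? Experiment says no. This is a little weird. It means +1/2 spin has zero overlap with -1/2 spin: not a full overlap with a negative sign, as an up arrow and a down arrow would have in ordinary space, but none at all. That should only be the case for vectors at right angles to each other. Somehow, these up and down arrows behave as if they're orthogonal.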

Say we've been measuring spin along the z-axis until now. We can set up a second S-G apparatus that measures along x (or y) and then send |↑z⟩ electrons through that. The z- and x-axes are at right angles, so there should definitely be no overlap. But electrons are capricious; they split evenly between |↑x⟩ and |↓x⟩, even though an arrow pointing straight up has no component along x at all.
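The Born rule turns these observations into arithmetic: the chance of an outcome is the squared magnitude of the overlap ⟨outcome|state⟩. This sketch uses the standard textbook components for |↑x⟩ and |↓x⟩ in the z basis; the helper names are illustrative.

```python
import math

def inner(a, b):
    """Complex inner product <a|b>; the first argument is conjugated."""
    return sum(x.conjugate() * y for x, y in zip(a, b))

def probability(outcome, state):
    """Born rule: chance that `state` is measured as `outcome`."""
    return abs(inner(outcome, state)) ** 2

r = 1 / math.sqrt(2)
UP_Z, DOWN_Z = (1, 0), (0, 1)
UP_X, DOWN_X = (r, r), (r, -r)

print(probability(DOWN_Z, UP_Z))  # 0: a second z-measurement never flips the result
print(probability(UP_X, UP_Z))    # about 0.5
print(probability(DOWN_X, UP_Z))  # about 0.5: an even split along x
```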

A pattern is emerging here. The 180° separation between |↑⟩ and |↓⟩ acts like a right angle. Right angles act like they’re only separated by 45°. And a full 360° rotation just turns a vector backward, giving it the minus sign at the center of all this. All our angles are halved. The space electrons inhabit is weird, as if someone tried to grab hold of all the axes and pull them together like a bouquet of flowers.
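The halving shows up directly if we write a spin pointing at angle θ from the z-axis (in the x-z plane) as the state (cos θ/2, sin θ/2), the standard textbook form. This rough sketch, with an illustrative helper `spin_state`, converts each overlap back into an angle between state vectors.

```python
import math

def spin_state(theta_degrees):
    """Spin-1/2 state pointing theta degrees away from +z, within the x-z plane.

    Components are along (|up_z>, |down_z>). Note the half angle: a physical
    rotation by theta shows up as theta / 2 inside the state vector.
    """
    half = math.radians(theta_degrees) / 2
    return (math.cos(half), math.sin(half))

up_z = spin_state(0)
for theta in (0, 90, 180, 360):
    s = spin_state(theta)
    # Overlap <up_z|s>; the components are real, so no complex conjugation is needed.
    overlap = up_z[0] * s[0] + up_z[1] * s[1]
    # Angle between the two state vectors, clamped against rounding outside [-1, 1].
    between = math.degrees(math.acos(max(-1.0, min(1.0, overlap))))
    print(f"physical angle {theta:3d} deg -> state vectors {between:5.1f} deg apart, "
          f"overlap {overlap:+.3f}")
```

A 180° flip lands 90° away in state space (orthogonal), a physical right angle lands 45° away, and 360° lands 180° away, which is the minus sign.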

Try to imagine that if you can, but don't worry if you can't; we're not describing a Euclidean space. You can sort of squeeze the z- and x-axes into an ordinary plane, with |↑z⟩ and |↓z⟩ at right angles and the x states tilted 45° from them, but any attempt to bring the y-axis in as well, while keeping 90° between every up and down and 45° between every pair of perpendicular axes, just won't work.

The only way to fit the y-axis in is to allow a new kind of rotation, multiplication by a complex phase, that is distinct from any Euclidean direction. That sounds like a job for the complex plane. In fact, our inability to properly imagine this space is directly analogous to not being able to find real roots for a system of equations, which as we know is where complex numbers shine. Vectors that are too close in real space can be rotated away from each other in complex space to give us the properties we need.
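Here is a check that one factor of i is enough. This sketch assumes the standard textbook states |↑y⟩ = (1, i)/√2 and |↓y⟩ = (1, -i)/√2, verifies all fifteen pairs among the six states, then scans real unit vectors to show that none can split evenly against both |↑z⟩ and |↑x⟩, which is what a purely real |↑y⟩ would have to do. Helper names are illustrative.

```python
import math

def inner(a, b):
    """Complex inner product <a|b>; the first argument is conjugated."""
    return sum(x.conjugate() * y for x, y in zip(a, b))

def prob(a, b):
    """Born-rule probability |<a|b>|^2."""
    return abs(inner(a, b)) ** 2

r = 1 / math.sqrt(2)
states = {
    "up_z": (1, 0), "down_z": (0, 1),
    "up_x": (r, r), "down_x": (r, -r),
    "up_y": (r, 1j * r), "down_y": (r, -1j * r),  # only the y states need a factor of i
}

# Same axis, opposite directions: probability 0 (orthogonal).
# Different axes: probability 1/2 (the "45 degree" overlap).
names = list(states)
for idx, a in enumerate(names):
    for b in names[idx + 1:]:
        expected = 0.0 if a[-1] == b[-1] else 0.5  # last letter is the axis
        assert math.isclose(prob(states[a], states[b]), expected, abs_tol=1e-12), (a, b)
print("all 15 pairs behave as the experiments require")

# With real components only, (cos t, sin t) can never split evenly against
# both up_z and up_x at once.
def mismatch(t):
    v = (math.cos(t), math.sin(t))
    return abs(prob(v, states["up_z"]) - 0.5) + abs(prob(v, states["up_x"]) - 0.5)

best = min(mismatch(k * math.pi / 1800) for k in range(3600))
print(round(best, 6))  # about 0.5, never 0
```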

From this mathematical curiosity—a space where rotation and orthogonality are governed by complex numbers—we find an accurate description of the subatomic particles that serve as matter's scaffolding. Electrons are best thought of not as tiny, spinning balls of charge but as wave functions rotating through a complex 2D vector space.

So what does it mean to have 3i apples? Nothing. But what does it mean to have 3 apple juice? The physical reality of complex numbers only manifests at the quantum level. To many philosophers, this indispensable presence demands ontological commitment. This is a way of saying, "Well, I guess if anything is real, that is." And how are we to say otherwise? Complex numbers might come from a world of pure imagination, but they're necessary for describing this world; shouldn't that count for something?

(Image credit: Warner Bros. for this picture and the song lyrics.)